Think about the last time you asked an AI to do something specific. Maybe you wanted it to write emails in your company’s tone or format data exactly like your spreadsheet.

You probably typed detailed instructions, got something close but not quite right, then spent another 20 minutes tweaking the prompt.

Here’s the thing: you were probably missing the simplest trick in the book. Instead of explaining what you want, you could just show it.

What Is Few-Shot Prompting?

Few-shot prompting is when you give an AI model a few examples of what you want before asking it to do the task. That’s it. You show it two or three samples, then let it figure out the pattern.

Let’s say you want the AI to categorise customer feedback. Instead of writing a paragraph explaining your categories, you’d do this:

“The product arrived damaged” → Complaint

“Your team was super helpful” → Praise

“Does this come in blue?” → Question

Now categorise: “Shipping took three weeks” →

The AI sees the pattern and knows you want it to sort feedback into those three buckets. What’s interesting here is that you’re not teaching it what complaints or questions are. It already knows. You’re just showing it how you want things organised in your specific context.
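If you build prompts like this in code, the technique comes down to listing your example pairs and leaving the last answer blank. Here’s a minimal Python sketch that reproduces the prompt above. `build_few_shot_prompt` is a name made up for illustration, not part of any library, and it assumes a plain-text prompt rather than any particular API.

```python
def build_few_shot_prompt(examples: list[tuple[str, str]], query: str) -> str:
    """Render each (feedback, category) pair as '"text" → Category', then
    append the new feedback with its category left blank for the model."""
    lines = [f'"{text}" → {label}' for text, label in examples]
    # Same pattern for the new input, minus the answer.
    lines.append(f'Now categorise: "{query}" →')
    return "\n".join(lines)


examples = [
    ("The product arrived damaged", "Complaint"),
    ("Your team was super helpful", "Praise"),
    ("Does this come in blue?", "Question"),
]
prompt = build_few_shot_prompt(examples, "Shipping took three weeks")
print(prompt)
```

Adding a fourth category means adding a fourth pair to `examples`; the prompt-building code stays the same.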

This approach differs from zero-shot prompting, where you just give instructions without examples. Few-shot techniques help the model adapt to your exact format, tone, or structure faster than explaining everything in words. It’s like showing someone how to fold a paper airplane instead of describing each crease.

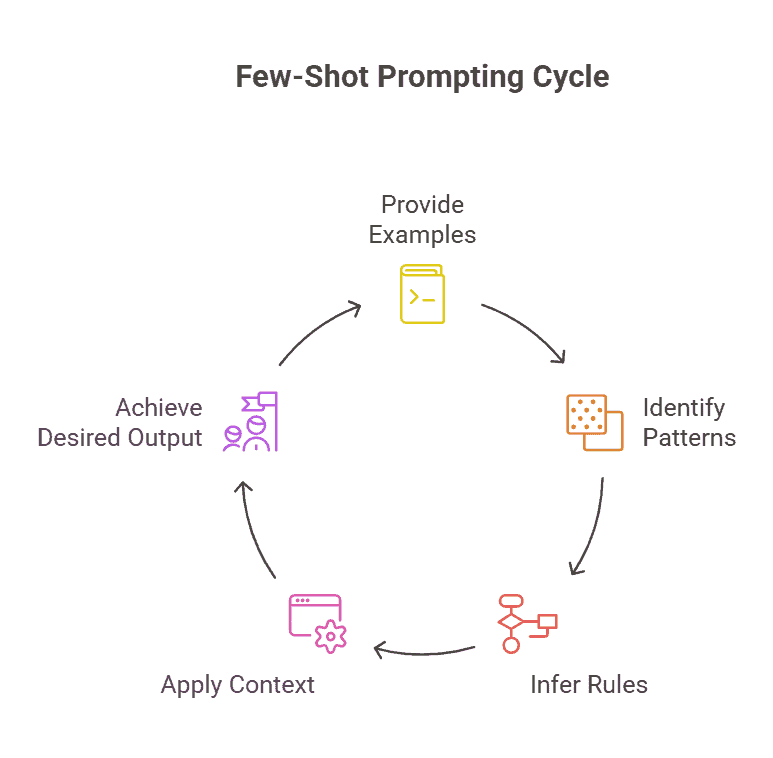

How Does Few-Shot Prompting Work?

Here’s what happens when you feed examples to an AI. The model reads each example as part of your prompt and starts spotting patterns in the input-output pairs. Nothing about the model itself is retrained; your examples only shape how it responds to this one prompt.

Think of it like showing a kid how to tie shoelaces. You don’t hand them a manual. You do it once, twice, maybe three times. They watch your hands, notice the loop, the pull, the tuck. That’s pattern recognition.

The AI does something similar. When you give it examples, it identifies the structure, tone, and format you’re after. It’s not memorising your examples word-for-word. It’s inferring rules from what you show it.

The thing is, context matters big time here. The model uses your examples as a reference frame for the task ahead. If your examples are about translating casual English to formal tone, the AI picks up on that shift. It then applies the same transformation to new text you throw at it.

Why does this beat lengthy instructions? Simple. Instructions tell the AI what to do. Examples show it how. And for complex tasks like mimicking a writing style or formatting data in a specific way, showing usually beats telling.

That’s why few-shot prompting remains one of the top prompt engineering techniques used today. It taps into how these models actually learn from context instead of fighting against their design.

Few-Shot vs Zero-Shot vs One-Shot Prompting

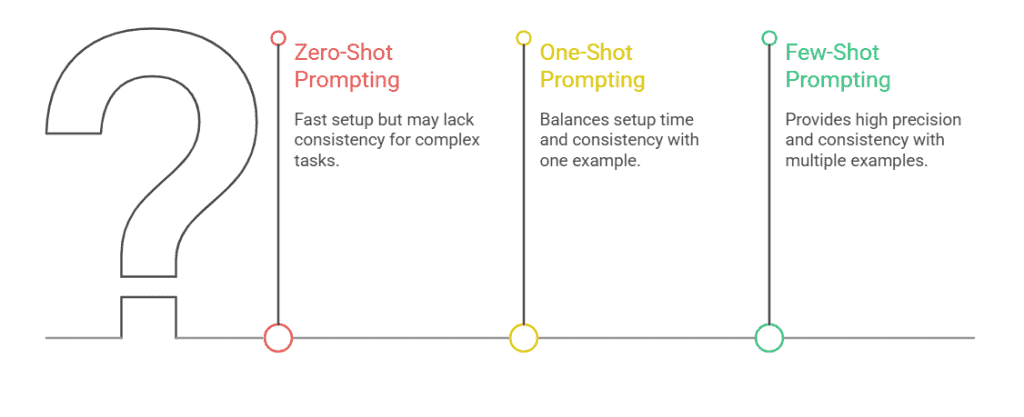

The difference between these three approaches comes down to how many examples you give the AI. Let’s say you want to categorise customer feedback as positive, negative, or neutral. Here’s how each method handles that.

Zero-Shot Prompting

You give the AI zero examples. Just instructions.

Your prompt: “Categorise this feedback as positive, negative, or neutral: The app crashes every time I try to save.”

The AI figures it out from what it already knows. Zero-shot prompting works fine for straightforward tasks, but according to research on prompt engineering techniques, zero-shot can struggle when you need consistent formatting or specific criteria. It’s fast to set up, though. No examples to write.

One-Shot Prompting

In one-shot prompting, you show the AI one example before asking it to perform the task.

Your prompt: “Categorise feedback as positive, negative, or neutral.

Example: ‘The new update is amazing’ = Positive

Now categorise: The app crashes every time I try to save.”

That single example gives the AI a clearer picture of what you want. It’s the middle ground: it takes a minute to create one good example, and you usually get better consistency than with zero-shot.

Few-Shot Prompting

In few-shot prompting, you provide multiple examples so the AI spots the pattern.

Your prompt: “Categorise feedback as positive, negative, or neutral.

Example 1: ‘The new update is amazing’ = Positive

Example 2: ‘It’s okay but nothing special’ = Neutral

Example 3: ‘Worst experience ever’ = Negative

Now categorise: The app crashes every time I try to save.”

Those three examples show the AI exactly how you judge sentiment. The tradeoff? You use more context space and need time to craft good examples. But when you need precision and consistency across hundreds of tasks, that setup time pays off.
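Because the three approaches differ only in how many examples come before the new input, one function can produce all of them. A sketch in the same spirit as the prompts above, assuming plain-text prompts; the names are illustrative, and the zero-shot wording is slightly simplified compared with the version shown earlier.

```python
INSTRUCTION = "Categorise feedback as positive, negative, or neutral."

SENTIMENT_EXAMPLES = [
    ("The new update is amazing", "Positive"),
    ("It's okay but nothing special", "Neutral"),
    ("Worst experience ever", "Negative"),
]


def build_prompt(instruction: str, examples: list[tuple[str, str]], query: str) -> str:
    """Instruction, then zero or more numbered examples, then the new input."""
    parts = [instruction]
    # Zero-shot skips this loop, one-shot runs it once, few-shot runs it n times.
    for i, (text, label) in enumerate(examples, start=1):
        parts.append(f"Example {i}: '{text}' = {label}")
    parts.append(f"Now categorise: {query}")
    return "\n\n".join(parts)


query = "The app crashes every time I try to save."
zero_shot = build_prompt(INSTRUCTION, [], query)
one_shot = build_prompt(INSTRUCTION, SENTIMENT_EXAMPLES[:1], query)
few_shot = build_prompt(INSTRUCTION, SENTIMENT_EXAMPLES, query)
print(few_shot)
```

Slicing `SENTIMENT_EXAMPLES[:n]` is the only difference between the three, which also makes it easy to compare how much context each extra example costs.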

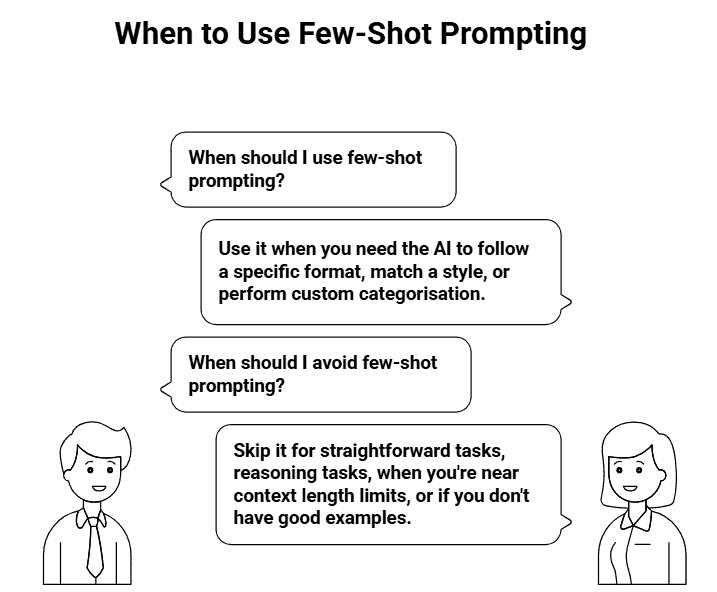

When To Use Few-Shot Prompting

You want few-shot prompting when you’re asking the AI to follow a specific format or structure. Let’s say you’re extracting customer feedback into a standard JSON format with specific field names. Showing 2-3 examples of how you want that output structured will get you there faster than writing paragraph-long instructions.
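Here’s a sketch of that JSON case in Python. The field names (`product`, `sentiment`, `issue`) and the feedback text are hypothetical, and the model reply is hard-coded because no particular API is assumed. The point is that when every example shows exact JSON, the reply becomes easy to check with `json.loads`.

```python
import json

# Hypothetical schema: every example output uses exactly these keys.
REQUIRED_KEYS = {"product", "sentiment", "issue"}

JSON_EXAMPLES = [
    ("The blender is loud but works great.",
     {"product": "blender", "sentiment": "positive", "issue": "noise"}),
    ("My kettle stopped heating after a week.",
     {"product": "kettle", "sentiment": "negative", "issue": "stopped heating"}),
]


def build_extraction_prompt(feedback: str) -> str:
    parts = ["Extract the product, sentiment, and issue as JSON."]
    for text, record in JSON_EXAMPLES:
        # json.dumps renders every example output in the same exact shape.
        parts.append(f"Feedback: {text}\nJSON: {json.dumps(record)}")
    parts.append(f"Feedback: {feedback}\nJSON:")
    return "\n\n".join(parts)


def parse_reply(reply: str) -> dict:
    """Reject replies that aren't valid JSON or don't match the example schema."""
    record = json.loads(reply)  # raises json.JSONDecodeError (a ValueError) on bad JSON
    if not isinstance(record, dict) or set(record) != REQUIRED_KEYS:
        raise ValueError(f"unexpected fields: {record!r}")
    return record


prompt = build_extraction_prompt("The toaster burns one side of the bread.")
# Stand-in for a real model reply:
reply = '{"product": "toaster", "sentiment": "negative", "issue": "uneven toasting"}'
print(parse_reply(reply))
```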

It’s also your go-to when you need to match a particular style or tone. If you’re generating social media captions that need to sound like your brand, maybe quirky with specific emoji patterns, examples teach the model what “your voice” actually means. Instructions alone rarely capture those nuances.

Here’s where it really shines: custom categorisation schemes. If you’re sorting support tickets into “Billing – Refund,” “Technical – Login,” and other categories only your team uses, examples show the AI exactly how to make those judgment calls.
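Custom schemes also give you a cheap sanity check: the model should only ever answer with a label your examples demonstrate. A minimal sketch; apart from “Billing – Refund” and “Technical – Login”, the ticket texts, the extra label, and the helper name are made up for illustration.

```python
from typing import Optional

# Hypothetical ticket examples; only the first two labels come from the text above.
TICKET_EXAMPLES = [
    ("I was charged but want my money back.", "Billing – Refund"),
    ("The password reset link never arrives.", "Technical – Login"),
    ("Can I change the card on file?", "Billing – Payment Method"),
]

# The only acceptable answers are the labels the examples demonstrate.
ALLOWED_LABELS = {label for _, label in TICKET_EXAMPLES}


def normalise_label(reply: str) -> Optional[str]:
    """Return the matching label, or None if the reply falls outside the scheme."""
    cleaned = reply.strip().strip('."')
    # A reply may use a plain hyphen instead of the en dash; map it back.
    cleaned = cleaned.replace(" - ", " – ")
    return cleaned if cleaned in ALLOWED_LABELS else None
```

A `None` result is your cue to re-prompt or route the ticket to a person, rather than silently accepting a category your team doesn’t use.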

The thing is, few-shot works best when consistency matters across multiple outputs. You’re not just generating one thing; you’re processing dozens of product descriptions, customer emails, or data entries that all need to follow the same pattern.

When Not To Use Few-Shot Prompting

Skip it for straightforward tasks where simple instructions work fine. If you’re asking, “Summarise this article in 3 sentences,” you don’t need examples. You’re just adding unnecessary context.

But here’s the catch that surprised us: Few-shot can actually hurt performance on reasoning tasks. According to recent research on reasoning models, techniques like few-shot prompting can degrade performance when the AI needs to think through complex problems. The examples sometimes constrain the model’s reasoning process instead of helping it.

You’ll also want to avoid it when you’re bumping against context length limits. Each example eats up tokens, space you might need for actual task content. If your prompt is already long, examples become expensive real estate.
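To get a rough sense of what your examples cost, a common rule of thumb for English text is about four characters per token. The sketch below uses that heuristic (an approximation, not a real tokenizer; use your model’s own tokenizer for exact counts) to keep only as many examples as fit a token budget you choose.

```python
def estimate_tokens(text: str) -> int:
    # Rough heuristic: ~4 characters per token for English text.
    # Real tokenizers differ, so treat this as a ballpark only.
    return max(1, len(text) // 4)


def fit_examples(examples: list[str], budget_tokens: int) -> list[str]:
    """Keep examples in order until the next one would exceed the budget."""
    kept: list[str] = []
    used = 0
    for example in examples:
        cost = estimate_tokens(example)
        if used + cost > budget_tokens:
            break
        kept.append(example)
        used += cost
    return kept


examples = [
    "Message: My payment went through twice.\nUrgency: High",
    "Message: Question about returns next month.\nUrgency: Low",
    "Message: The app crashes on photo upload.\nUrgency: Medium",
]
print(len(fit_examples(examples, budget_tokens=30)))
```

Ordering matters here: put your strongest examples first so they’re the ones that survive a tight budget.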

And honestly? If you don’t have good examples, don’t force it. Bad or inconsistent examples teach the model the wrong patterns. Better to stick with clear instructions than mislead with mediocre demonstrations.

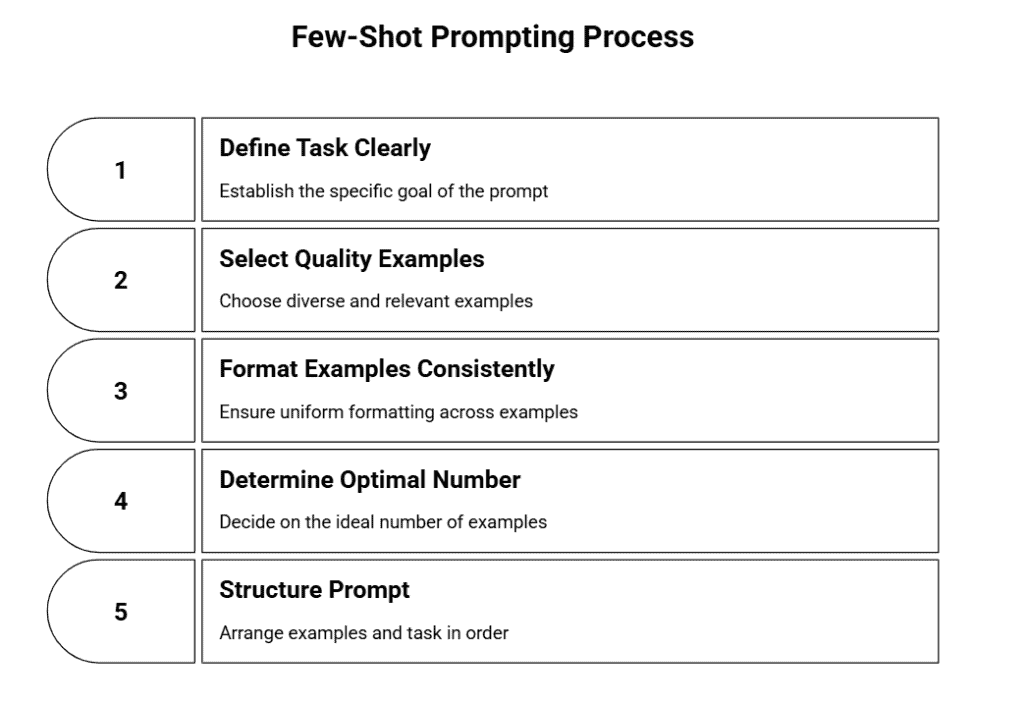

How To Use Few-Shot Prompting (Step-by-Step)

Here’s the thing: few-shot prompting isn’t complicated, but getting it right matters. Follow these five steps to create prompts that actually work.

Step 1: Define Your Task Clearly

Before you grab examples, nail down what you want. Are you asking the AI to classify sentiment, extract data, or write in a specific style? Get specific. Instead of “help me write better emails,” try “write professional follow-up emails to clients who haven’t responded in two weeks.” The clearer your task definition, the easier it becomes to pick examples that match. You’re basically setting the rules of the game before showing how to play it.

Step 2: Select Quality Examples

Your examples shape everything the AI produces, so choose carefully. Pick ones that cover different scenarios your task might encounter. If you’re classifying customer feedback, include examples of positive, negative, and neutral responses. Also, grab edge cases, the tricky ones that could confuse the model, such as a complaint disguised as a compliment. When crafting effective examples, diversity matters more than quantity. One solid tip: test your examples yourself first. If you can follow the pattern, the AI probably can too.
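If you draw examples from a larger pool, one way to enforce coverage is to take one example per label before adding anything else. A sketch, assuming the pool is a list of (text, label) pairs in priority order; `pick_diverse` and the sample feedback are hypothetical.

```python
def pick_diverse(pool: list[tuple[str, str]], k: int) -> list[tuple[str, str]]:
    """Pick up to k examples, covering each label once before adding extras."""
    chosen: list[tuple[str, str]] = []
    seen_labels: set[str] = set()
    # First pass: the first example of each label, in pool order.
    for text, label in pool:
        if label not in seen_labels:
            chosen.append((text, label))
            seen_labels.add(label)
    # Second pass: top up with the remaining examples (e.g. more edge cases).
    for item in pool:
        if len(chosen) >= k:
            break
        if item not in chosen:
            chosen.append(item)
    # If k is smaller than the number of labels, some labels get cut here.
    return chosen[:k]


pool = [
    ("Love the new design", "Positive"),
    ("Great, another update that breaks everything", "Negative"),  # sarcastic edge case
    ("Love how fast support replied", "Positive"),
    ("It arrived on Tuesday", "Neutral"),
    ("Works fine, I guess", "Neutral"),
]
print(pick_diverse(pool, 4))
```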

Step 3: Format Examples Consistently

This is where most people mess up. Every example needs identical formatting. If your first example uses “Input: / Output:” labels, all of them should. If you separate sections with line breaks, do it the same way each time. The AI looks for patterns, and inconsistent formatting breaks that pattern recognition. Even small changes, like switching from colons to dashes, can confuse the model.
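The easiest way to keep formatting identical is to never type examples by hand: render every one through the same template. A sketch using the `Input: / Output:` labels mentioned above; the template string, helper names, and casual-to-formal sample pairs are illustrative.

```python
TEMPLATE = "Input: {input}\nOutput: {output}"


def render_examples(pairs: list[tuple[str, str]]) -> str:
    # Every example goes through the same template, so labels, colons,
    # and line breaks can't drift from one example to the next.
    return "\n\n".join(TEMPLATE.format(input=i, output=o) for i, o in pairs)


def render_query(text: str) -> str:
    # The new input uses the same template with the output left empty.
    return TEMPLATE.format(input=text, output="").rstrip()


pairs = [
    ("hey can u send the report", "Could you please send the report?"),
    ("thx for the help", "Thank you for your help."),
]
prompt = render_examples(pairs) + "\n\n" + render_query("gonna be late")
print(prompt)
```

Switching from colons to dashes then becomes a one-line change to `TEMPLATE`, applied to every example at once.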

Step 4: Determine The Optimal Number of Examples

You might think more examples equal better results, but that’s not always true. Many tasks do well with 2-5 examples. Two examples help the AI spot a pattern. Three to five examples reinforce it without overwhelming the prompt. Go beyond that and you’re often eating up your context window without much benefit. Start with three examples. If results are shaky, add one or two more. If they’re already solid, you’re done.
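“Start with three, add more if results are shaky” is easy to turn into a small measurement: score each example count against a few inputs you’ve already labelled. In this sketch, `call_model` is a hypothetical stand-in for whatever API you use, and `toy_model` is a keyword matcher that exists only so the code runs on its own. The test messages are invented.

```python
from typing import Callable

URGENCY_EXAMPLES = [
    ("My payment went through twice and I need a refund immediately.", "High"),
    ("I have a question about your return policy for next month.", "Low"),
    ("The app crashes every time I try to upload a photo.", "Medium"),
]

# A tiny labelled test set; in practice, use real messages you've labelled.
TEST_SET = [
    ("I need a refund for a double charge", "High"),
    ("What are your opening hours?", "Low"),
]


def accuracy_for_k(
    call_model: Callable[[str], str],
    examples: list[tuple[str, str]],
    test_set: list[tuple[str, str]],
    k: int,
) -> float:
    """Prompt with the first k examples for each test input; return the share correct."""
    shots = "\n\n".join(f"Message: {text}\nUrgency: {label}" for text, label in examples[:k])
    correct = 0
    for text, expected in test_set:
        prompt = f"{shots}\n\nMessage: {text}\nUrgency:"
        if call_model(prompt).strip() == expected:
            correct += 1
    return correct / len(test_set)


def toy_model(prompt: str) -> str:
    # Stand-in for a real API call: it only keyword-matches the final message,
    # so it ignores the examples entirely. Swap in your own client here.
    last_message = prompt.rsplit("Message:", 1)[1].lower()
    return "High" if "refund" in last_message else "Low"


for k in range(1, len(URGENCY_EXAMPLES) + 1):
    print(k, accuracy_for_k(toy_model, URGENCY_EXAMPLES, TEST_SET, k))
```

With a real model behind `call_model`, a reasonable choice is the smallest k where accuracy stops improving. The toy stand-in scores the same for every k precisely because it never reads the examples.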

Step 5: Structure Your Prompt

Put your examples first, then add your actual task at the end. This order lets the AI learn the pattern before applying it. Here’s the layout: your examples (with consistent formatting), then a clear instruction like “Now classify this customer review,” followed by the new input. Keep it clean. No extra explanation between examples, just let the pattern speak for itself. That structure gives the AI everything it needs to deliver what you want.
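If you’re calling a chat-style API instead of sending one block of text, a common convention is to replay each example as a user turn followed by an assistant turn, then put the instruction and new input in the final user turn. The `role`/`content` dictionaries below follow that widely used shape, but no specific SDK is assumed, so check your provider’s documentation for the exact format.

```python
def build_chat_messages(
    examples: list[tuple[str, str]], instruction: str, new_input: str
) -> list[dict[str, str]]:
    messages: list[dict[str, str]] = []
    # 1. Examples first: each input is a user turn, each answer an assistant turn.
    for example_input, example_output in examples:
        messages.append({"role": "user", "content": example_input})
        messages.append({"role": "assistant", "content": example_output})
    # 2. Then the instruction plus the new input, as the last user turn.
    messages.append({"role": "user", "content": f"{instruction}\n\n{new_input}"})
    return messages


msgs = build_chat_messages(
    [("Great product, fast delivery.", "Positive"), ("Arrived broken.", "Negative")],
    "Now classify this customer review.",
    "Does what it says, nothing more.",
)
for m in msgs:
    print(m["role"], "->", m["content"])
```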

Few-Shot Prompting Examples

Text Classification

Let’s say you’re sorting customer support tickets by urgency. Here’s how you’d set it up:

Prompt:

Classify the urgency of customer messages as “High,” “Medium,” or “Low.”

Message: “My payment went through twice and I need a refund immediately.”

Urgency: High

Message: “I have a question about your return policy for next month.”

Urgency: Low

Message: “The app crashes every time I try to upload a photo.”

Urgency: Medium

Message: “I can’t log into my account and I have a meeting in 30 minutes.”

Urgency:

The model learns your urgency criteria from the examples. You’re showing it what “High” looks like (financial issues, time-sensitive) versus “Low” (general questions, no rush).

Content Generation

Say you’re writing product descriptions that need a specific tone. Here’s what that looks like:

Prompt:

Write product descriptions in a friendly, benefit-focused style.

Product: Wireless Earbuds

Description: Your commute just got better. These earbuds block out subway noise so you can actually hear your podcast. Plus, the battery lasts your entire workday.

Product: Standing Desk

Description: Your back will thank you. Switch between sitting and standing without breaking your focus. Takes 30 seconds to adjust, fits any workspace.

Product: Smart Thermostat

Description:

You’re teaching the AI your brand voice through examples. Notice how each one starts with a benefit, keeps sentences short, and speaks directly to the reader’s life.

Data Extraction

You need to pull specific details from messy text. Here’s how:

Prompt:

Extract the meeting date, time, and attendees from these messages.

Text: “Hey team, let’s meet Tuesday at 2pm. Sarah and Mike should join.”

Date: Tuesday

Time: 2pm

Attendees: Sarah, Mike

Text: “Conference call scheduled for March 15th, 10:30am with the design team.”

Date: March 15th

Time: 10:30am

Attendees: design team

Text: “Can we do a quick sync tomorrow morning around 9? Need you and Alex there.”

Date:

Time:

Attendees:

The examples show the AI exactly what format you want. It learns to handle different phrasings while maintaining consistent output structure.
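A side benefit of a fixed `Field: value` layout is that the reply is easy to parse. A sketch, assuming the model answers with the three fields in the same format as the examples; `parse_fields` is a hypothetical helper, and the sample reply is invented rather than real model output.

```python
FIELDS = ("Date", "Time", "Attendees")


def parse_fields(reply: str) -> dict[str, str]:
    """Turn 'Field: value' lines into a dict, skipping blank or unrelated lines."""
    result: dict[str, str] = {}
    for line in reply.splitlines():
        # partition splits on the first colon only, so "10:30am" stays intact.
        name, sep, value = line.partition(":")
        if sep and name.strip() in FIELDS:
            result[name.strip()] = value.strip()
    missing = [field for field in FIELDS if field not in result]
    if missing:
        raise ValueError(f"reply is missing: {', '.join(missing)}")
    return result


reply = "Date: Tomorrow\n\nTime: 9am\n\nAttendees: you, Alex"
print(parse_fields(reply))
```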

Code Generation

You want code written in your team’s specific style. Here’s the setup:

Prompt:

Write Python functions with descriptive names, type hints, and inline comments.

Task: Check if a number is even

Code:

```python
def is_even_number(num: int) -> bool:
    # Return True if number is divisible by 2
    return num % 2 == 0
```

Task: Calculate rectangle area

Code:

```python
def calculate_rectangle_area(width: float, height: float) -> float:
    # Multiply width by height to get area
    return width * height
```

Task: Convert temperature from Celsius to Fahrenheit

Code:

Your examples define the coding standards. The AI picks up on naming conventions, comment style, and type annotation patterns.
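For the last task, a completion that follows the pattern might look like the sketch below. It shows what you’d hope to get back, not actual model output: a descriptive name, type hints, an inline comment, and the standard formula F = C × 9/5 + 32.

```python
def convert_celsius_to_fahrenheit(celsius: float) -> float:
    # Multiply by 9/5, then add 32 to shift onto the Fahrenheit scale
    return celsius * 9 / 5 + 32
```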

Best Practices For Few-Shot Prompting

Run your examples through the AI yourself before handing them off to anyone else. What looks clear to you might confuse the model, and you’ll only catch that by testing. If the output feels off, tweak your examples until it clicks.

Treat your examples like living documents. As you see what actually works in practice, swap out weaker examples for stronger ones. The first set you create rarely ends up being the best set.

Here’s something that trips people up: they pull examples from whatever’s easiest to find. But your examples need to match the real scenarios you’re dealing with. If you’re writing product descriptions for tech gadgets, don’t use clothing examples just because they’re simpler.

Balance matters too. You want enough variety that the model doesn’t just memorise one pattern, but not so much that it gets confused about what you’re actually asking for. Two to four examples usually hits that sweet spot.

Watch your outputs closely, especially at the start. If something’s not working, it’s usually your examples sending mixed signals. Refine them based on what you’re seeing, not what you think should work.

Common Mistakes To Avoid

Mixing examples from different domains tanks your results. One example about customer service emails and another about technical documentation? The model won’t know which style or structure to follow. Stick to one context.

Inconsistent formatting between examples creates chaos. If your first example uses bullet points and the second uses paragraphs, the AI won’t know which format you actually want. Keep the structure identical across all examples.

Cramming in too many examples backfires. You’re eating up context space and potentially confusing the model with information overload. More isn’t always better here.

Examples that contradict each other can be worse than no examples at all. If one example is formal and another is casual, you’re basically telling the AI to do two opposite things. That’s on you, not the model.

Skipping the test run before you scale is how you waste time and money. One quick test with your examples can save you from processing hundreds of bad outputs.

The thing about few-shot prompting is that it gets better the more you use it. Start small, test what works, and build from there. Your first attempts won’t be perfect, and that’s completely normal.

Aashish is a startup consultant, digital marketer, traveller, and philomath. He has worked with over 20 startups and successfully helped them ideate, raise money, and succeed. When not working, he can be found hiking, camping, and stargazing.
