Today, as AI takes over the world, ChatGPT is the pioneer that made everyone believe in artificial intelligence’s true power and potential. You no longer just talk to it like a human-like friend; you use it for advanced tasks such as generating text, answering questions, writing code and even creating images.

But how can a machine do almost all the textual (and image) tasks a human can do? How does ChatGPT work? How was it built? Let’s dive into the technicalities of ChatGPT and understand its intricacies.

What is ChatGPT?

ChatGPT stands for “Chat Generative Pre-trained Transformer”. It is an artificial intelligence-based system that uses natural language processing (NLP) to generate conversational responses in text and, through integrated tools, images.

It is essentially an advanced chatbot that uses deep learning algorithms to understand and generate human-like responses. This means it can understand context, tone, and sentiment, making conversations more natural and personalised.

But ChatGPT isn’t the base technology. It is a product of OpenAI, built as a chatbot on top of the GPT-3.5 model (and, in later versions, GPT-4). The GPT model is the deep learning model that allows ChatGPT to generate human-like responses. GPT stands for Generative Pre-trained Transformer:

- Generative: It generates new text based on the given input. That is, it’s a generative AI model capable of producing human-like responses.

- Pre-trained: The model has been trained on a massive amount of text data to learn how language works. This training used unsupervised (more precisely, self-supervised) learning: the model repeatedly predicts the next word in real text and checks itself against the word that actually follows, so no one has to label the data by hand.

- Transformer: A transformer is an advanced deep learning architecture that uses attention mechanisms to understand the context of a given text input. This allows the model to generate coherent and relevant responses. In simple terms, a transformer captures the relationships and connections between words in a sentence, much as humans do. It does so by paying more attention to relevant words and less to irrelevant ones.
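To make “paying attention” more concrete, here is a minimal sketch of scaled dot-product attention, the core operation introduced in the original Transformer paper, written in plain Python. The vectors are made-up toy values; in a real model the queries, keys and values are learned projections of token embeddings with hundreds or thousands of dimensions, and many attention heads run in parallel.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention(query, keys, values):
    """Scaled dot-product attention for a single query vector."""
    d = len(query)
    # Score each word: how well does its key match what the query is looking for?
    scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d) for key in keys]
    weights = softmax(scores)
    # The output is a weighted average of the value vectors.
    dim = len(values[0])
    output = [sum(w * v[i] for w, v in zip(weights, values)) for i in range(dim)]
    return weights, output

# Hypothetical 2-dimensional vectors for three words in a sentence.
keys = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
values = [[10.0, 0.0], [0.0, 10.0], [5.0, 5.0]]
query = [1.0, 0.0]  # a query that "looks for" the first feature

weights, output = attention(query, keys, values)
print([round(w, 3) for w in weights])  # words 1 and 3 get more weight than word 2
print([round(x, 2) for x in output])
```

The second word’s key does not match the query, so it receives the smallest weight and contributes least to the output: that is the “ignore irrelevant words” behaviour in miniature.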

ChatGPT Basics

Before understanding and discussing how ChatGPT works, let’s get introduced to some of its basic terminologies and concepts.

- Large Language Model (LLM): A large language model is a deep learning-based AI model that has been trained on massive amounts of text data, such as books, articles, and conversations. The GPT models behind ChatGPT are a great example of LLMs. The primary purpose of these models is to understand and generate human-like text.

- Natural Language Processing (NLP): NLP is a branch of artificial intelligence that focuses on enabling computers to understand, interpret, and generate human language. ChatGPT uses NLP techniques to process input text and generate coherent responses.

- Predictive Text Model: A predictive text model is an AI model that uses the preceding context to anticipate and generate the next words or phrases. ChatGPT works this way: it produces relevant and logical responses by repeatedly predicting what comes next in the conversation. For example, when you start typing a sentence on your phone, the keyboard suggests words based on what you have already typed. This is an example of a predictive text model. Similarly, ChatGPT anticipates and generates text based on the conversation context.

- Training: Training is a process in which an AI model learns patterns and relationships from a given dataset. In the case of ChatGPT, the model has been trained on massive amounts of text data to understand language patterns and generate human-like responses.

- Training Data: Training data refers to the dataset used to train a machine learning or deep learning model. In the case of ChatGPT, the training data includes books, articles, and conversations from various sources.

- Tokenisation: Tokenisation is the process of breaking a sentence or text into smaller units called tokens. In ChatGPT, tokenisation turns each input into a form the model can process. For example, it splits a sentence into tokens (often whole words, but also pieces of longer words and punctuation marks) and assigns each token a unique number. The model then uses these tokens to work out the relationships between words and generate an appropriate response.

- Neural Network Development: A neural network is a computer system inspired by the structure and function of the human brain, used to process complex data and perform tasks like pattern recognition, prediction, and decision-making. In the case of ChatGPT, the neural network is responsible for learning from the training data and generating responses based on that knowledge. Designing and training such a network is what we refer to here as Neural Network Development.

- Reinforcement Learning From Human Feedback (RLHF): RLHF is a machine learning technique that uses human feedback to improve and refine the performance of an AI model. In ChatGPT, RLHF is used to finetune the model based on how human reviewers rate its responses. This helps improve the accuracy and naturalness of the responses.

- Multimodal: Multimodal refers to the use of multiple modes or forms of communication, such as text, speech, images or videos. Newer versions of ChatGPT are multimodal: they can understand and respond with language (text) as well as other media like images.
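The phone-keyboard analogy from the Predictive Text Model entry can be made concrete with a bigram model, about the simplest predictive text model there is: it counts which word tends to follow which and suggests the most frequent followers. This is only an illustration of the idea under toy assumptions; ChatGPT uses a neural network that conditions on the whole context, not just the previous word, and predicts tokens rather than whole words.

```python
from collections import Counter, defaultdict

def train_bigrams(text):
    """Count how often each word follows each other word in a toy corpus."""
    words = text.lower().split()
    follows = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows

def suggest(follows, word, n=3):
    """Return up to n of the most frequent next words, like a keyboard's suggestion bar."""
    return [w for w, _ in follows[word.lower()].most_common(n)]

# Hypothetical training text; a real model learns from billions of words.
corpus = "I am feeling happy today . I am feeling excited . I am going home ."
model = train_bigrams(corpus)
print(suggest(model, "am"))       # "feeling" follows "am" twice, "going" once
print(suggest(model, "feeling"))  # "happy" and "excited" are tied
```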

How Does ChatGPT Work?

ChatGPT combines chat-like capabilities with an advanced deep learning model, GPT.

In simplest terms, here’s how the ChatGPT model works:

- It starts with an input text.

- The model then analyses the text, paying attention to relevant words and understanding the context.

- Based on this analysis, the model generates a coherent and human-like response. The interesting part? GPT doesn’t understand words per se; it converts them into numbers (a process called tokenisation) and then predicts the most likely next word based on those numbers. For example, if you type “I am feeling”, the model assigns a number to each word and predicts the next most likely word. In this case, it could generate something like “happy” or “excited”.

- Each predicted word is added to the text, and the cycle of input, analysis and response repeats, one word at a time, until the response is complete or a length limit is reached.
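This loop is called autoregressive generation: every generated word is appended to the input before the next one is predicted. The sketch below replaces the neural network with a hypothetical lookup table keyed on the last word (a real model looks at the whole context and outputs probabilities), and stops either at an end-of-text marker or at a length limit.

```python
# Hypothetical next-word table standing in for the neural network:
# given the last word, it returns the most likely next word.
NEXT_WORD = {
    "I": "am",
    "am": "feeling",
    "feeling": "happy",
    "happy": "today",
    "today": "<end>",
}

def generate(prompt_words, max_new_words=10, stop="<end>"):
    """Autoregressive generation: predict a word, append it, repeat."""
    words = list(prompt_words)
    for _ in range(max_new_words):
        next_word = NEXT_WORD.get(words[-1], stop)  # the "prediction" step
        if next_word == stop:  # the model signals that the reply is finished
            break
        words.append(next_word)  # the new word becomes part of the input
    return words

print(generate(["I", "am", "feeling"]))   # stops at the end marker
print(generate(["I"], max_new_words=2))   # stops at the length limit
```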

All this happens in real time, making it seem like the model is having a genuine conversation with the user. In general, the more data and conversations a model is trained on, the better it gets at generating human-like responses.

But how did the GPT model achieve such impressive capabilities? It’s because of its dataset and architecture.

How Was ChatGPT Trained?

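When it comes to LLMs (large language models), the training process has two main ingredients: data and computation. In the case of ChatGPT, the underlying GPT model was first pre-trained on an enormous dataset of text using self-supervised learning (predicting the next word), and then finetuned with supervised learning and human feedback, as described below.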

Now, GPT wasn’t trained on a single dataset but on a combination of sources such as books, articles, and websites. For GPT-3, the model that GPT-3.5 builds on, these included:

- Common Crawl: A repository of web crawl data covering over 25 billion web pages, maintained by the non-profit Common Crawl foundation, which has collected petabytes of freely available data over more than eight years.

- WebText2: An extensive database of webpages extracted from outbound links on Reddit that received at least three upvotes.

- Books1: A large collection of 11,038 books, totalling approximately 74 million sentences and 1 billion words. The books cover various sub-genres such as romance, historical, adventure, and more.

- Books2: A dataset similar to Books1 whose exact contents OpenAI has not disclosed; it is often described as a random sample of a small subset of the public domain books available online, including both classic and modern literature.

- Wikipedia: An online encyclopedia containing nearly 6 million articles and over 4.5 billion words in total.
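These sources were not simply poured together. The GPT-3 paper (Brown et al., 2020, “Language Models are Few-Shot Learners”) reports that higher-quality sources were sampled more often relative to their size, with roughly 60% of training examples drawn from filtered Common Crawl, 22% from WebText2, 8% each from Books1 and Books2, and 3% from Wikipedia. A minimal sketch of that kind of weighted data mixture, using those reported proportions:

```python
import random
from collections import Counter

# Approximate sampling weights reported in the GPT-3 paper.
# They add up to 101% because of rounding; random.choices normalises them.
MIXTURE = {
    "Common Crawl (filtered)": 60,
    "WebText2": 22,
    "Books1": 8,
    "Books2": 8,
    "Wikipedia": 3,
}

def sample_sources(n, seed=0):
    """Pick which dataset each of n training examples is drawn from."""
    rng = random.Random(seed)
    names = list(MIXTURE)
    return rng.choices(names, weights=[MIXTURE[k] for k in names], k=n)

counts = Counter(sample_sources(100_000))
for name, count in counts.most_common():
    print(f"{name:25s} {count / 100_000:.1%}")
```

Because Wikipedia is tiny compared with Common Crawl, sampling by weight rather than by size means the model sees Wikipedia text several times while seeing most Common Crawl text less than once.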

These large datasets are then fed into powerful computers that run deep learning algorithms to train the GPT model. The training process repeatedly passes batches of text through the model, measures how well it predicts the next word, and adjusts the model’s parameters (its weights) to reduce those errors, until it can reliably generate human-like text.
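Here is a toy version of “adjust the parameters to reduce errors”. The model’s only parameters are three scores (logits) for the word that follows one fixed context, “I am feeling …”, and gradient descent on the cross-entropy loss moves them until the predicted probabilities match how often each word followed that context in the training text. The vocabulary, data and learning rate are made-up assumptions; real training computes gradients for billions of parameters across all contexts at once using backpropagation.

```python
import math

VOCAB = ["happy", "sad", "tired"]

def softmax(logits):
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def train(targets, steps=200, lr=0.5):
    """Fit next-word logits for one context by full-batch gradient descent.

    targets: the next words observed after that context in the training text.
    """
    logits = [0.0] * len(VOCAB)  # start with no preference
    for _ in range(steps):
        probs = softmax(logits)
        grads = [0.0] * len(VOCAB)
        for word in targets:
            target = VOCAB.index(word)
            # Gradient of the cross-entropy loss w.r.t. the logits: predicted - actual.
            for i, p in enumerate(probs):
                grads[i] += (p - (1.0 if i == target else 0.0)) / len(targets)
        # Nudge every parameter a small step against its gradient.
        logits = [z - lr * g for z, g in zip(logits, grads)]
    return softmax(logits)

observed = ["happy", "happy", "happy", "tired"]  # hypothetical training data
probs = train(observed)
print({w: round(p, 2) for w, p in zip(VOCAB, probs)})  # close to 75% / 0% / 25%
```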

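But this isn’t all. The above datasets were used to train GPT-3, one of the early versions of the GPT model. GPT-3 wasn’t as conversational or human-like as ChatGPT, which OpenAI built by finetuning a GPT-3.5 model for conversational use cases. OpenAI has said this finetuning used conversations written by human AI trainers, who played both the user and the AI assistant. Public datasets such as Persona Chat, a collection of human-to-human conversations in which each speaker plays an assigned persona, are examples of this kind of conversational data, although OpenAI has not published its full list of finetuning data.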

Besides Persona Chat, other public conversational datasets of this kind include:

- Cornell Movie Dialogs Corpus: A dataset containing conversations from movies, including over 220,000 conversational exchanges between more than 10,000 pairs of movie characters.

- DailyDialog Corpus: A dataset containing over 13,000 multi-turn dialogues, manually labelled with emotion and dialogue-act information. The dataset was created to reflect the day-to-day conversations that humans normally engage in. Its emotion labels include no emotion, anger, disgust, fear, happiness, sadness, and surprise.

- Ubuntu Dialogue Corpus: A dataset containing almost 1 million multi-turn dialogues extracted from Ubuntu technical-support chat logs.

Data like this helps a model become more conversational and can even teach it to mimic a personality based on given persona information. This means ChatGPT can generate responses that align with a specific persona, making the conversation more personal and realistic.

How Does ChatGPT Think Like A Human?

Training a model with large amounts of conversation data is not enough to make it human-like. ChatGPT uses deep neural networks and self-attention mechanisms to process the input text, allowing it to capture contextual dependencies and generate appropriate responses.

To turn the model’s predictions into text, systems like ChatGPT use decoding strategies such as beam search or top-k sampling, which choose each next word from the probabilities the model assigns to every candidate.
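Top-k sampling keeps only the k highest-scoring candidates, turns their scores into probabilities, and picks one at random, so the output varies but never includes very unlikely words. The sketch below assumes hypothetical model scores; OpenAI has not published ChatGPT’s exact decoding settings (its API exposes `temperature` and `top_p`, a related method called nucleus sampling).

```python
import math
import random

def top_k_sample(logits, k, temperature=1.0, rng=random):
    """Sample a token index from the k highest-scoring candidates only."""
    # Keep the indices of the k largest scores.
    top = sorted(range(len(logits)), key=lambda i: logits[i], reverse=True)[:k]
    # Softmax over the survivors (temperature < 1 sharpens, > 1 flattens).
    scaled = [logits[i] / temperature for i in top]
    m = max(scaled)
    weights = [math.exp(s - m) for s in scaled]
    return rng.choices(top, weights=weights, k=1)[0]

vocab = ["happy", "excited", "tired", "banana", "purple"]
logits = [3.0, 2.5, 2.0, -1.0, -2.0]  # hypothetical scores after "I am feeling"

rng = random.Random(42)
picks = [vocab[top_k_sample(logits, k=2, rng=rng)] for _ in range(1000)]
print(sorted(set(picks)))  # only the two top-scoring words can appear
```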

All of this operates on tokens: tokenisation first breaks the input text into smaller chunks and assigns each a numerical value.
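Tokens are not always whole words. GPT models use byte-pair encoding (BPE), which learns a vocabulary of frequent word pieces; the sketch below uses a simpler stand-in, greedy longest-match splitting against a hypothetical vocabulary, just to show how one word can become several tokens with their own IDs.

```python
def tokenise(word, vocab):
    """Split one word into the longest vocabulary pieces, left to right."""
    ids = []
    start = 0
    while start < len(word):
        # Try the longest remaining substring first, then shrink it.
        for end in range(len(word), start, -1):
            piece = word[start:end]
            if piece in vocab:
                ids.append(vocab[piece])
                start = end
                break
        else:
            raise ValueError(f"cannot tokenise {word[start:]!r}")
    return ids

# Hypothetical vocabulary: word pieces plus single letters as a fallback.
vocab = {"token": 1, "is": 2, "ation": 3, "un": 4, "believ": 5, "able": 6,
         **{c: 100 + i for i, c in enumerate("abcdefghijklmnopqrstuvwxyz")}}

print(tokenise("tokenisation", vocab))  # token + is + ation
print(tokenise("unbelievable", vocab))  # un + believ + able
print(tokenise("cat", vocab))           # no piece matches, so single letters
```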

Here’s a detailed example:

Suppose you input the text: “Hi, how are you?”

The tokeniser breaks it down into individual words and punctuation marks and assigns each one a numerical value (the numbers below are illustrative):

- Hi: 101

- ,: 102

- how: 103

- are: 104

- you: 105

- ?: 106

These numerical values are then passed through the neural network, which captures the contextual dependencies between the words.
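The tokenisation step of this example can be reproduced with a toy word-level tokeniser. The vocabulary is the illustrative one from the example, with “?” mapped to 106; a real tokeniser has tens of thousands of entries and also splits words into sub-word pieces.

```python
import re

# Illustrative vocabulary matching the example IDs (hypothetical).
VOCAB = {"Hi": 101, ",": 102, "how": 103, "are": 104, "you": 105, "?": 106}

def tokenise(text):
    """Split text into words and punctuation marks, then map each to its ID."""
    pieces = re.findall(r"\w+|[^\w\s]", text)
    return pieces, [VOCAB[p] for p in pieces]

pieces, ids = tokenise("Hi, how are you?")
print(pieces)  # ['Hi', ',', 'how', 'are', 'you', '?']
print(ids)     # [101, 102, 103, 104, 105, 106]
```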

The model then predicts how likely each possible next word is, and a technique like beam search or top-k sampling picks the next word. This repeats word by word until the response is complete.
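Beam search, unlike sampling, is deterministic: it keeps the few most probable partial responses at every step instead of committing to a single word. The sketch below uses a hypothetical table of next-word probabilities to show why that matters: always taking the single best next word (a beam width of 1, i.e. greedy decoding) can miss a response that is more probable overall.

```python
import math

# Hypothetical next-word probabilities, keyed by the words generated so far.
MODEL = {
    (): {"I": 0.6, "We": 0.4},
    ("I",): {"am": 0.55, "feel": 0.45},
    ("We",): {"are": 0.9, "feel": 0.1},
    ("I", "am"): {"<end>": 1.0},
    ("I", "feel"): {"<end>": 1.0},
    ("We", "are"): {"<end>": 1.0},
    ("We", "feel"): {"<end>": 1.0},
}

def beam_search(beam_width, max_len=5):
    """Keep the beam_width most probable partial sequences at every step."""
    beams = [((), 0.0)]  # (words so far, log-probability)
    for _ in range(max_len):
        candidates = []
        for words, score in beams:
            if words and words[-1] == "<end>":
                candidates.append((words, score))  # finished: carry it over
                continue
            for word, p in MODEL[words].items():
                candidates.append((words + (word,), score + math.log(p)))
        # Prune: only the best beam_width sequences survive.
        beams = sorted(candidates, key=lambda c: c[1], reverse=True)[:beam_width]
    best_words, best_score = beams[0]
    return best_words, math.exp(best_score)

print(beam_search(beam_width=1))  # greedy: "I am", probability 0.6 * 0.55 = 0.33
print(beam_search(beam_width=2))  # keeps "We" alive and finds "We are", 0.4 * 0.9 = 0.36
```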

This process is repeated for each new message. Because earlier messages in the conversation are included in the model’s input, ChatGPT can adapt its responses to the context of the conversation.

Initially, ChatGPT’s responses come from pre-training on vast amounts of data. On top of that, OpenAI used RLHF (reinforcement learning from human feedback) to refine its responses and make them more natural and realistic, and feedback from users can inform later rounds of this training.

To understand this better, let’s take the example of language translation.

Initially, a machine translation system may give you direct word-for-word translations, which don’t always make sense. But as it is trained on human feedback and corrections, it learns the nuances of language and produces more accurate translations.

The same principle applies to conversation generation with ChatGPT: human feedback on its responses teaches later versions of the model which kinds of responses people prefer.

When this is done at scale, as OpenAI did by offering ChatGPT to the public for free, it gains a vast amount of human feedback and conversation data to improve future versions of the model.

This is why ChatGPT can provide such realistic and personalised conversation experiences: it has been trained on real human interactions, and feedback from them continues to shape new versions.

How Does ChatGPT Learn From Human Input?

Training a model like ChatGPT with RLHF (reinforcement learning from human feedback) usually works in two stages:

- Pre-training stage – Where ChatGPT is trained on a vast amount of data (text) to build its initial knowledge base and language understanding.

- Human-interaction stage – Where human trainers write example responses and rate ChatGPT’s outputs, allowing it to refine and improve its conversations over time.

ChatGPT uses reinforcement learning techniques during the human interaction stage to analyse human feedback and adjust its response generation accordingly.

It learns from both positive and negative feedback, making its responses more accurate and helpful with each round of training.

This extra training involves three additional rounds:

- Supervised Finetuning (SFT)

- Reward Model

- Reinforcement Learning

Supervised Finetuning (SFT)

Supervised Finetuning (SFT) involves adapting a pre-trained language model to perform a specific task, such as responding accurately to user queries or maintaining a consistent tone. This is done by providing the model with a carefully curated dataset containing input prompts and desired output responses. The resulting SFT model serves as a starting point for subsequent stages of training.

For example, imagine you have a pet that you want to teach to fetch a ball. At first, you show the pet how to do it by guiding them with a leash. This is like providing the language model with labelled data to teach it a specific task.
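OpenAI has not published exactly how its SFT demonstrations were formatted. A common approach, assumed here, is to train on prompt plus response as ordinary next-word prediction while only scoring the response tokens, so the model learns to produce answers rather than to imitate prompts. The token IDs are hypothetical, and `-100` mirrors the default `ignore_index` of PyTorch’s `CrossEntropyLoss`, a convention many training scripts follow.

```python
IGNORE = -100  # label value the loss function is told to skip

def build_sft_example(prompt_ids, response_ids):
    """Turn one (prompt, ideal response) demonstration into inputs and labels.

    The model sees prompt + response and is trained to predict each next token,
    but only the response tokens count towards the loss.
    """
    input_ids = prompt_ids + response_ids
    # Next-token targets: position i should predict token i + 1.
    labels = input_ids[1:] + [IGNORE]
    # Mask targets that still lie inside the prompt.
    for i in range(len(prompt_ids) - 1):
        labels[i] = IGNORE
    return input_ids, labels

prompt = [11, 12, 13]   # hypothetical token IDs for a user question
response = [21, 22]     # hypothetical token IDs for the trainer's ideal answer
print(build_sft_example(prompt, response))
# ([11, 12, 13, 21, 22], [-100, -100, 21, 22, -100])
```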

Reward Model

The reward model evaluates the quality of the responses produced by the SFT model. Human labellers compare and rank several of the model’s outputs for the same prompt, and the reward model is trained on these rankings to assign a numerical score (a reward) to any response. This reward signal guides the model towards producing more desirable responses during the reinforcement learning stage.

For example, after your pet attempts to fetch the ball, you give them a treat if they do it correctly. This is similar to the reward model, which evaluates the quality of the language model’s responses and provides feedback as rewards.
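OpenAI’s InstructGPT work, whose method ChatGPT follows, trains the reward model on pairs of responses with a loss that is small when the preferred response scores higher: −log σ(r_preferred − r_rejected). The sketch below applies that pairwise loss to a linear reward model over hand-made features; the features and comparisons are hypothetical, and a real reward model is itself a large language model that reads the prompt and the full response.

```python
import math

def reward(weights, features):
    """Linear reward model: score = weighted sum of a response's features."""
    return sum(w * f for w, f in zip(weights, features))

def pairwise_loss(r_chosen, r_rejected):
    """-log(sigmoid(r_chosen - r_rejected)): small when the preferred response scores higher."""
    return math.log1p(math.exp(-(r_chosen - r_rejected)))

def train_reward_model(comparisons, n_features, steps=500, lr=0.1):
    """comparisons: list of (features_of_preferred, features_of_rejected) pairs."""
    weights = [0.0] * n_features
    for _ in range(steps):
        grads = [0.0] * n_features
        for chosen, rejected in comparisons:
            margin = reward(weights, chosen) - reward(weights, rejected)
            coeff = -1.0 / (1.0 + math.exp(margin))  # d(loss)/d(margin)
            for i in range(n_features):
                grads[i] += coeff * (chosen[i] - rejected[i]) / len(comparisons)
        weights = [w - lr * g for w, g in zip(weights, grads)]
    return weights

# Hypothetical features: [answers the question, is polite, contains an error]
comparisons = [
    ([1, 1, 0], [0, 1, 0]),  # labellers preferred the response that answered
    ([1, 1, 0], [1, 0, 0]),  # ... the polite one
    ([1, 0, 0], [1, 0, 1]),  # ... the error-free one
]
weights = train_reward_model(comparisons, n_features=3)
print([round(w, 2) for w in weights])  # positive, positive, negative
print(reward(weights, [1, 1, 0]) > reward(weights, [0, 0, 1]))  # True
```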

Reinforcement Learning (RL)

Reinforcement Learning (RL) aims to teach the model to make decisions that lead to favourable outcomes without explicitly specifying every possible scenario. Using the scores from the reward model, the RL algorithm (OpenAI used Proximal Policy Optimization, or PPO) adjusts the model’s parameters to increase the likelihood of receiving high rewards when generating responses. This helps ensure that the model produces more accurate and appropriate responses.

In the pet example, as your pet continues to practice, they learn to associate fetching the ball with receiving a treat. They adjust their behaviour to increase the likelihood of getting a treat. This is akin to reinforcement learning, where the language model adjusts its responses based on the feedback from the reward model.
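PPO is too involved for a short example, so the sketch below uses the simpler REINFORCE policy-gradient method to show the core idea: sample a response, then make it more likely if its reward beats the average and less likely if it falls short. The “policy” here only chooses among three canned responses with hypothetical reward-model scores; a real model generates responses token by token, and PPO additionally limits how far each update can move the model (InstructGPT also penalised drifting too far from the SFT model).

```python
import math
import random

# Three canned responses and hypothetical reward-model scores for them.
RESPONSES = ["Here is a clear, step-by-step answer.", "I don't know.", "Whatever."]
REWARDS = [1.0, 0.2, -1.0]

def softmax(logits):
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def reinforce(steps=2000, lr=0.1, seed=0):
    """REINFORCE: sample a response, then shift probability towards it
    in proportion to how much its reward beats the running average."""
    rng = random.Random(seed)
    logits = [0.0] * len(RESPONSES)  # the "policy": start with no preference
    baseline = 0.0                   # running average reward, reduces noise
    for _ in range(steps):
        probs = softmax(logits)
        choice = rng.choices(range(len(RESPONSES)), weights=probs, k=1)[0]
        reward = REWARDS[choice]
        advantage = reward - baseline
        # Gradient of log(probs[choice]) w.r.t. logit i is 1[i == choice] - probs[i].
        for i in range(len(logits)):
            grad_logp = (1.0 if i == choice else 0.0) - probs[i]
            logits[i] += lr * advantage * grad_logp
        baseline += 0.05 * (reward - baseline)
    return softmax(logits)

probs = reinforce()
for text, p in zip(RESPONSES, probs):
    print(f"{p:.2f}  {text}")
```

After training, almost all of the probability sits on the highest-reward response, much like the pet that learns which behaviour earns the treat.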

Even though this isn’t a proprietary concept, it has proven to be effective in training language models to generate high-quality responses. Several AI development services have utilised reinforcement learning techniques to improve their language processing models.

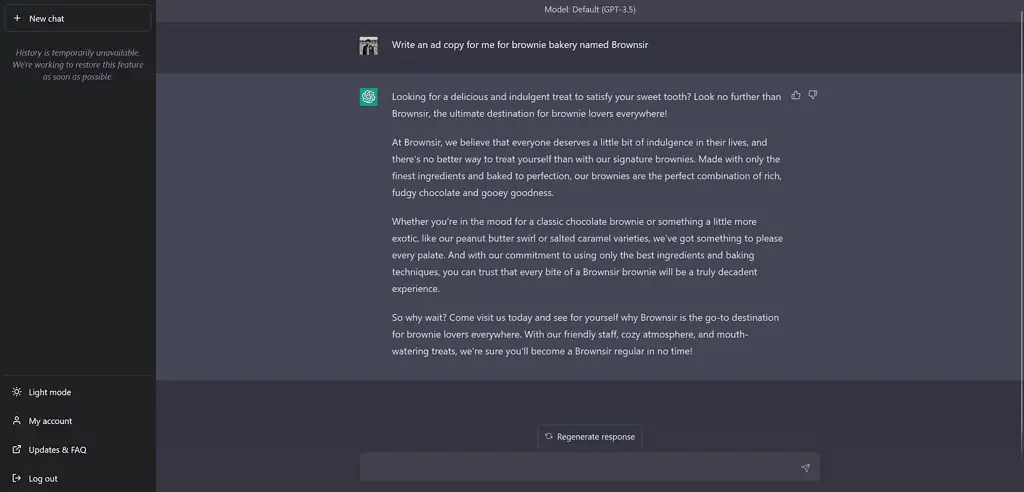

What Can ChatGPT Do?

ChatGPT is amongst the most advanced natural language processing tools available today. It can generate human-like text, and now that it is integrated with DALL·E 3, it can also generate images from text input.

Honestly, ChatGPT’s range of uses is broad and keeps growing as researchers and developers improve its performance and integrate it with more advanced models and algorithms.

Here are some potential use cases for ChatGPT:

- Personal assistants: With the ability to understand context and generate human-like responses, ChatGPT can serve as a virtual personal assistant that helps with various tasks and answers questions.

- Creative writing: ChatGPT can be used as a tool for writers to generate ideas or even entire pieces of content. It can also serve as a co-writer, providing suggestions and expanding on the writer’s ideas.

- Language translation: With its ability to understand context and generate natural-sounding responses, ChatGPT can potentially be used for language translation tasks, making the translated text sound more natural and human-like.

- Customer service: ChatGPT can be integrated into customer service chatbots to provide more personalised and human-like responses to customer inquiries and concerns.

- Educational tools: With its ability to generate accurate and appropriate responses based on context, ChatGPT can potentially serve as a tool for educational purposes, such as virtual tutors or study aids.

- Creative AI projects: ChatGPT can be used in various creative AI projects, such as generating art or writing lyrics, scripts and prompts for music or video content based on text inputs.

- Text-to-image generation: ChatGPT has built-in integration with DALL·E 3, a model that can generate images from text descriptions. This opens up possibilities for creating visual aids or illustrations based on written content.

- Coding: ChatGPT can also be used to assist with coding tasks, such as generating code snippets or providing suggestions for completing lines of code.

A startup consultant, digital marketer, traveller, and philomath. Aashish has worked with over 20 startups and successfully helped them ideate, raise money, and succeed. When not working, he can be found hiking, camping, and stargazing.
