A few years ago, the biggest academic integrity concern was copy-paste plagiarism from Wikipedia. Today, students are generating entire dissertations with ChatGPT, and universities are scrambling to catch up.

Nearly 7,000 UK university students were formally caught cheating with AI tools during the 2023-24 academic year. That's 5.1 cases per 1,000 students, roughly triple the rate from just one year earlier.

Here’s what makes this even more complex: 86% of students globally are using AI tools in their studies. The line between legitimate AI assistance and academic misconduct has become incredibly blurry.

This article breaks down the latest AI cheating statistics, detection trends, and what these numbers actually mean for education in 2025.

Key Statistics

AI has fundamentally changed academic integrity. Here’s what the data reveals:

Student Usage Patterns

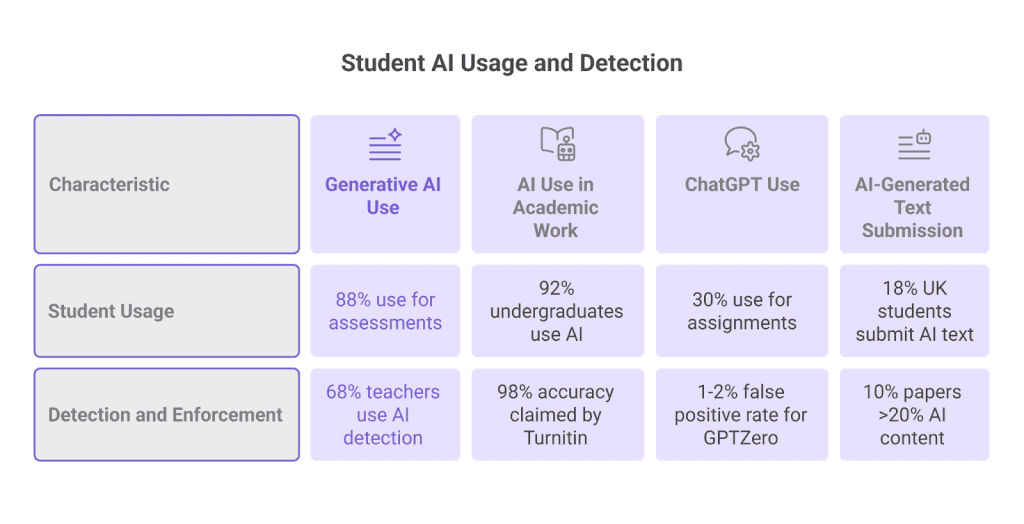

- 88% of students now use generative AI tools for assessments, up from 53% in 2024

- 92% of undergraduate students use AI tools for academic work, up from 66% in 2024

- 30% of students admitted to using ChatGPT specifically for assignments

- 18% of UK undergraduate students admit to submitting AI-generated text in their assignments

Detection and Enforcement

- 68% of teachers use AI detection tools to identify academic dishonesty

- 98% accuracy claimed by Turnitin for AI content detection

- 1-2% false positive rate for GPTZero in standard testing scenarios

- 10% of submitted papers contain more than 20% AI-generated content

Consequences and Discipline

- 5.1 per 1,000 students in UK universities were caught cheating with AI in 2023-24, triple the previous year’s rate

- 64% discipline rate by end of school year, up from 48% at the start

AI Cheating by Student Demographics

The numbers tell an interesting story about who's turning to AI for academic help:

- 45% of ChatGPT users are under 25 years old

- 64% of ChatGPT users are male, and 36% are female

- 25% of students use AI tools daily for academic work

- 54% of students use AI tools weekly

- An average of 2.1 AI tools used per student in higher education

- 66% of students prefer ChatGPT over other AI tools

- 25% of students use Grammarly as their primary AI writing assistant

What jumps out is how the under-25 crowd has embraced AI tools. Nearly half of all ChatGPT users fall into this age bracket, which makes sense when you consider this generation grew up with smartphones and social media. They see AI as just another digital tool to get things done faster.

The gender split hints at how different groups approach AI, although it describes ChatGPT users overall rather than students specifically. Male students tend to use AI tools more frequently, often for technical tasks like coding or research. Female students, while using AI less overall, typically focus on writing and editing assistance. That may help explain why tools like Grammarly maintain strong usage rates despite ChatGPT's dominance.

The usage patterns show AI isn’t just a weekend cramming tool. With 25% of students using AI daily and over half using it weekly, these tools have become part of regular study routines. Students aren’t replacing traditional learning; they’re layering AI on top of it. Think of the student who uses ChatGPT to brainstorm essay topics in the morning, then switches to Grammarly for editing in the evening.

The fact that students use an average of 2.1 AI tools suggests they're becoming sophisticated users. They might use ChatGPT for research, Grammarly for writing polish, and another tool for math problems. This multi-tool approach hints that students are thinking strategically about which AI fits which task best.

AI Detection Tool Accuracy and Limitations

Here’s what the numbers reveal about how AI detection tools actually perform:

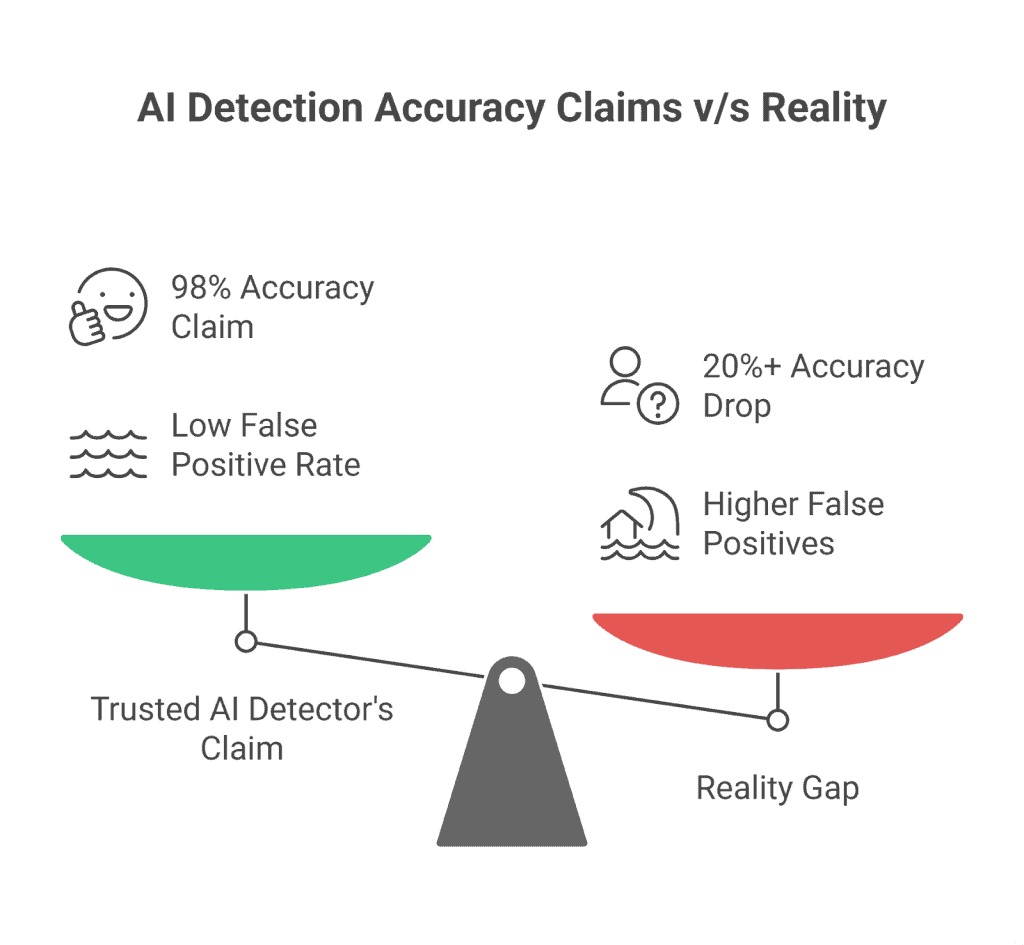

- Turnitin claims 98% accuracy for AI content detection

- GPTZero shows a 1-2% false positive rate in controlled testing environments

- Accuracy drops by 20+ percentage points when AI content gets paraphrased or modified

- 10% of submitted papers contain more than 20% AI-generated content

- Non-native English speakers face significantly higher false positive rates

- The detection tool market is projected to grow from $359.8M (2020) to $1.02B (2028)
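To put the market projection in perspective, growing from $359.8M to $1.02B over eight years works out to roughly 14% compound annual growth. The minimal Python sketch below assumes the standard compound annual growth rate (CAGR) formula and treats 2020 to 2028 as eight full years; the `cagr` helper is a hypothetical name used only for illustration.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate: the constant yearly rate that turns
    start_value into end_value over the given number of years."""
    return (end_value / start_value) ** (1 / years) - 1


# Market figures from the list above, in millions of US dollars.
# Assumption: 2020 to 2028 counts as eight full years of growth.
rate = cagr(359.8, 1020.0, 2028 - 2020)
print(f"Implied annual growth: {rate:.1%}")
# → Implied annual growth: 13.9%
```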

The Reality Gap

That 98% accuracy claim from Turnitin looks impressive on paper. But real academic settings tell a different story. When students paraphrase AI-generated content or use simple rewording techniques, these tools struggle to keep up. The 20+ percentage point accuracy drop isn't just a minor hiccup; at scale, it could mean thousands of AI-assisted papers slipping through detection.

What’s more concerning is the bias issue. Research shows that AI detectors flagged a disproportionate percentage of essays written by non-native English speakers as AI-generated, even when they were completely human-written. This means international students face unfair scrutiny simply because their writing patterns differ from native speakers.

Why False Positives Matter

A 1-2% false positive rate might sound minimal, but think about what that means at scale. In a university with 20,000 students, even if each student submits just one human-written paper that gets screened, that's potentially 200-400 students wrongly flagged, and possibly accused of cheating, each semester. These false accusations can destroy academic careers and create lasting damage to student-teacher relationships.
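The 200-400 estimate is simple multiplication under a strong assumption: every one of the 20,000 students submits exactly one honest paper per semester, and each paper is screened and flagged independently. The minimal Python sketch below makes that assumption explicit; the function and its `papers_per_student` parameter are hypothetical names, not part of any detection tool.

```python
def expected_false_flags(students: int, papers_per_student: int,
                         false_positive_rate: float) -> float:
    """Expected number of honest papers wrongly flagged as AI-generated.

    Assumes every screened paper is human-written and that each paper is
    flagged independently with probability false_positive_rate.
    """
    return students * papers_per_student * false_positive_rate


# Hypothetical scenario from the text: 20,000 students, one paper each.
for rate in (0.01, 0.02):
    flags = expected_false_flags(20_000, 1, rate)
    print(f"{rate:.0%} false positive rate: about {flags:.0f} honest papers flagged")
```

Because the expected count scales linearly with `papers_per_student`, an institution that screens several assignments per student each semester should expect proportionally more false flags, though some of them would land on the same students.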

The detection tool market’s explosive growth, from $359.8M to over $1B, shows how invested institutions are in this technology. But you have to wonder if they’re buying into a solution that’s not quite ready for the complexity of real academic assessment. The gap between marketing claims and classroom reality remains frustratingly wide.

Institutional Response and Discipline Rates

Schools aren’t just catching more students; they’re actually following through on consequences.

That 64% discipline rate by the end of the school year? It started at just 48% at the beginning of the academic year. This jump shows institutions are getting serious about enforcement as they figure out their approach to AI misconduct.
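A move from 48% to 64% is easy to misread: it is a 16-percentage-point rise, which is a one-third increase relative to the starting level. The small Python sketch below separates the two measures; the `point_and_relative_change` helper is a hypothetical name used only for illustration.

```python
def point_and_relative_change(before_pct: float, after_pct: float) -> tuple[float, float]:
    """Return (percentage-point change, relative change in percent)."""
    points = after_pct - before_pct
    relative = points / before_pct * 100
    return points, relative


points, relative = point_and_relative_change(48, 64)
print(f"+{points:.0f} percentage points, a {relative:.0f}% relative increase")
# → +16 percentage points, a 33% relative increase
```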

What’s interesting is how much policies vary between institutions. Some universities treat AI assistance like any other form of plagiarism, applying existing disciplinary frameworks. Others are creating entirely new categories of academic misconduct specifically for AI use.

But institutions are struggling with consistency. A student using ChatGPT to brainstorm ideas might face no penalty at one university and a formal warning at another. This uncertainty is creating anxiety for both students and faculty.

The bigger challenge? Most educators weren’t trained to spot or handle AI cheating. Universities are scrambling to provide professional development on detection tools, policy enforcement, and fair assessment practices.

Some institutions are taking a more collaborative approach. Instead of purely punitive measures, they’re offering “AI literacy” courses where students learn appropriate use of these technologies. This shift recognises that AI skills will be essential in many careers, but students need guidance on ethical boundaries.

The result is a patchwork of responses across higher education. Schools are learning as they go, adjusting policies based on what works and what creates more problems than it solves.

Global AI Cheating Trends and Regional Differences

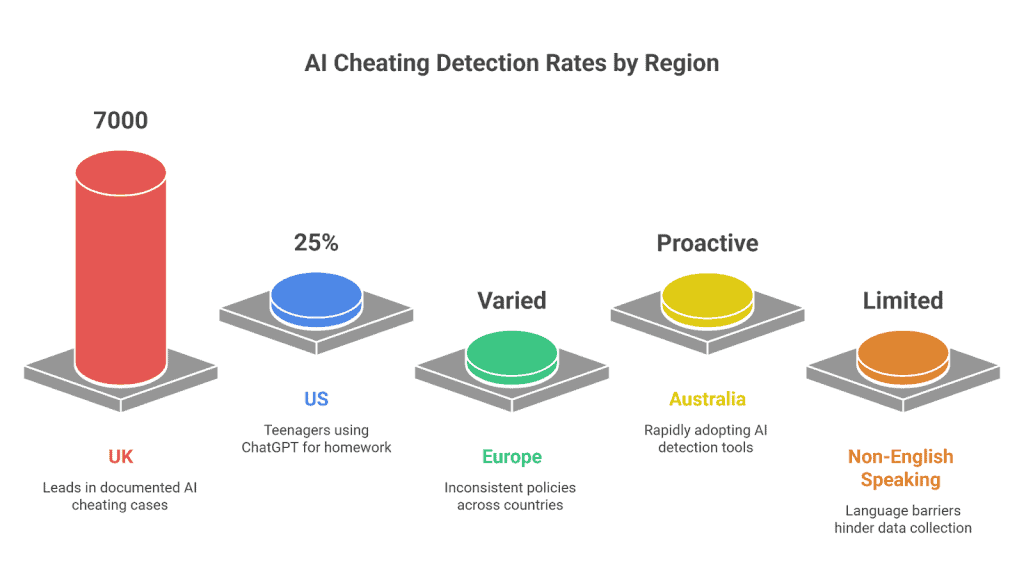

Here’s what might surprise you: the countries catching the most AI cheaters aren’t necessarily the ones with the biggest problem. They’re just the ones looking hardest.

The UK leads in documented cases, with nearly 7,000 incidents tracked across universities. But that comprehensive tracking reveals something important about detection versus reality. While British institutions have robust monitoring systems in place, other regions might be experiencing similar rates without the infrastructure to catch them.

Take the United States, where one in four teenagers were using ChatGPT for homework assignments by early 2025. That's a massive jump from previous years, yet many American schools still lack consistent detection policies. The disconnect between usage and oversight creates a significant blind spot in understanding true misconduct rates.

What’s interesting is how cultural attitudes shape these patterns. In Europe, institutions show growing concern about AI assistance, but responses vary dramatically between countries. Scandinavian universities tend toward educational approaches, while German institutions often implement stricter penalties. This patchwork of policies across the EU creates inconsistent data collection.

Australia represents another approach entirely. Universities there are rapidly increasing adoption of AI detection tools, suggesting they're preparing for higher misconduct rates rather than reacting to them. It's a proactive stance, and if visible detection deters students from cheating in the first place, it might explain why their reported numbers remain relatively low.

The bigger challenge lies in non-English-speaking countries, where data remains limited. This isn’t necessarily because AI cheating occurs less frequently. Language barriers in detection tools and different academic integrity frameworks make consistent global tracking nearly impossible.

What these regional differences tell us is crucial: detection rates reflect institutional capacity more than actual misconduct levels. Countries with comprehensive tracking systems naturally document more cases. Meanwhile, the global statistic that 86% of students use AI tools in their studies suggests the practice transcends borders, even if the documentation doesn’t.

This creates a complex picture where regions with lower reported rates might actually have higher unreported incidents. Understanding these differences helps explain why global AI cheating statistics can seem contradictory depending on the source.

Impact of AI Cheating

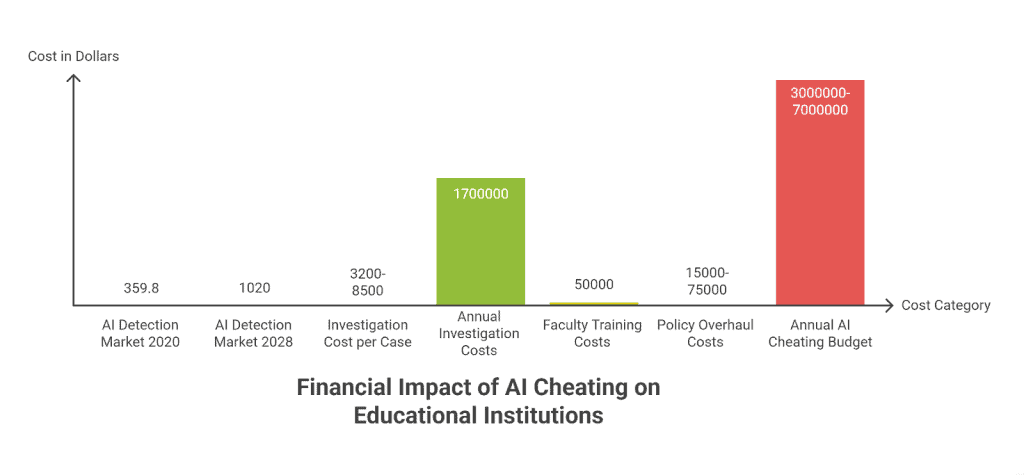

When students use AI to cheat, someone always pays the bill. Educational institutions are discovering that academic dishonesty powered by artificial intelligence comes with a hefty price tag that extends far beyond the cost of catching cheaters.

The financial burden starts with detection. Remember that AI detection tool market we mentioned? It’s exploding from $359.8 million in 2020 to a projected $1.02 billion by 2028. That’s not just growth; it’s institutions scrambling to keep up with increasingly sophisticated cheating methods.

But detection software is just the tip of the iceberg. Each misconduct investigation costs educational institutions between $3,200 and $8,500 on average once you factor in administrative time, legal reviews, and academic committee proceedings. A single large university handling 200 cases annually could face investigation costs alone of $640,000 to $1.7 million.
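The $1.7 million figure corresponds to the top of the per-case range; the bottom of the range gives a much smaller total. The quick Python sketch below, using a hypothetical helper called `investigation_cost_range`, computes both ends for a 200-case year and assumes every case falls somewhere within the quoted per-case range.

```python
def investigation_cost_range(cases: int, low_per_case: float,
                             high_per_case: float) -> tuple[float, float]:
    """Return (low, high) total annual investigation cost for a caseload,
    assuming every case costs somewhere within the per-case range."""
    return cases * low_per_case, cases * high_per_case


low, high = investigation_cost_range(200, 3_200, 8_500)
print(f"200 cases: ${low:,.0f} to ${high:,.0f}")
# → 200 cases: $640,000 to $1,700,000
```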

The hidden costs run deeper. Institutions are redirecting substantial portions of their budgets toward staff training programs, with some colleges spending upward of $50,000 annually to educate faculty on identifying AI-generated work. Technology departments are stretched thin implementing new detection systems and updating existing plagiarism protocols.

Then there’s reputation damage. When high-profile cheating scandals break, universities face declining enrollment numbers that translate directly to lost revenue. A 2023 study found that institutions experiencing publicised academic integrity breaches saw average enrollment drops of 8-12% over the following two years.

Policy development adds another layer of expense. Universities are hiring specialised consultants and legal experts to craft AI-specific academic integrity policies, with costs ranging from $15,000 to $75,000 per comprehensive policy overhaul.

The math is stark: mid-sized universities now allocate between 3% and 7% of their annual operating budgets to combating AI cheating through detection, investigation, and prevention measures. For a university with a $100 million budget, that's potentially $3 million to $7 million annually dedicated to addressing artificial intelligence misconduct.
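The $7 million figure is the upper end of the 3-7% range; the lower end matters just as much when planning a budget. The minimal Python sketch below computes both bounds for a $100 million operating budget; `budget_share_range` is a hypothetical helper, and percentages are passed as whole numbers (3 means 3%).

```python
def budget_share_range(operating_budget: float, low_pct: float,
                       high_pct: float) -> tuple[float, float]:
    """Return the (low, high) dollar amounts for a percentage range of a
    budget. Percentages are whole numbers, so 3 means 3%."""
    return operating_budget * low_pct / 100, operating_budget * high_pct / 100


low, high = budget_share_range(100_000_000, 3, 7)
print(f"${low / 1e6:.0f}M to ${high / 1e6:.0f}M per year")
# → $3M to $7M per year
```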

What started as students using free AI tools has evolved into a multi-million-dollar challenge that's reshaping how educational institutions allocate resources and plan their budgets.

Future Trends and Projections

The current 86% usage rate of AI tools among students isn’t just a number; it’s a preview of tomorrow’s academic landscape. You’re looking at a generation that considers AI assistance as natural as using a calculator, and that mindset is reshaping everything about how education works.

The trajectory is clear. AI tool adoption is likely to reach near-universal levels within the next two years. What started as early adopters experimenting with ChatGPT has become mainstream student behaviour. The bigger shift? Students are getting more sophisticated about how they use these tools.

Detection technology is racing to keep up, but it’s playing catch-up in a game where the rules keep changing. You’re seeing a classic arms race developing. As detection tools improve, AI becomes better at mimicking human writing patterns. The result? Detection accuracy rates that fluctuate wildly as both sides evolve.

Academic institutions are starting to realise something important. Traditional assessment methods, the ones built around individual written work completed without supervision, are becoming less relevant. You're already seeing early adopters shift toward collaborative projects, oral examinations, and real-time problem-solving assessments.

Policy changes are happening faster than anyone expected. Universities that banned AI tools six months ago are now developing “AI acceptable use” guidelines instead. The conversation shifted from “How do we stop this?” to “How do we work with this reality?”

Here’s what you can expect in the next three to five years. Digital literacy will become as fundamental as basic writing skills. Students will need to understand not just how to use AI, but when it’s appropriate and how to maintain their own learning process alongside it.

The long-term picture suggests a complete redefinition of academic integrity. Instead of focusing solely on preventing AI use, institutions will likely develop new frameworks that distinguish between AI assistance and AI dependence. You’re moving toward a world where the question isn’t whether students use AI, but whether they can think critically with and without it.

A startup consultant, digital marketer, traveller, and philomath. Aashish has worked with over 20 startups and successfully helped them ideate, raise money, and succeed. When not working, he can be found hiking, camping, and stargazing.